Data protection measures are only as strong as their ability to outsmart increasingly advanced technology and bad actors while still preserving the utility and value of the underlying data. With data breaches taking an average of nearly 300 days to detect, being proactive about how data is protected is more important than ever, especially when the only alternative is damage control.

As cloud technology has become more sophisticated, data teams are better able to put safeguards in place that mitigate the risk of unauthorized use. In fact, the range of options for protecting data has grown so much that it can be hard to know which is right for an organization's specific types of data and use cases. Two widely popular, yet often confused, options are data tokenization and data masking.

In this blog, we’ll distinguish between the two approaches and clarify which to use based on your data needs.

What Is Data Tokenization?

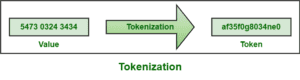

Data tokenization is the process of replacing sensitive data with a randomly generated string, known as a token. Because tokens are non-sensitive placeholders with no inherent meaning, they cannot be reverse-engineered; only the original tokenization system can map a token back to its original value, a process called detokenization. This is where tokenization differs from encryption, which can be undone by anyone who knows the encryption key. Tokenization is therefore, in many ways, a stronger means of protecting sensitive personal data such as Social Security numbers and bank account numbers.
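To make the token-and-vault idea concrete, here is a minimal Python sketch. The `TokenVault` class and its methods are hypothetical illustrations, not any product's API. The mapping lives in an in-memory dictionary here, whereas a real tokenization system would keep it in a separately secured store.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory token vault (illustration only).

    A production system would hold this mapping in a separately secured,
    access-controlled store, never alongside the tokenized data.
    """

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse an existing token so each value maps to exactly one token (1:1).
        if value in self._value_to_token:
            return self._value_to_token[value]
        # Draw a random token; it is not derived from the value in any way.
        token = "tok_" + secrets.token_hex(8)
        while token in self._token_to_value:  # guard against a (very unlikely) collision
            token = "tok_" + secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only whoever holds the vault's mapping can recover the original value.
        return self._token_to_value[token]


vault = TokenVault()
ssn_token = vault.tokenize("123-45-6789")
print(ssn_token)                    # "tok_" followed by 16 random hex characters
print(vault.detokenize(ssn_token))  # 123-45-6789
```

Because the token comes from `secrets` rather than being computed from the value, nothing about `123-45-6789` can be derived from it; recovering the value requires the vault's mapping.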

Below is a simple graphic showing how tokenization works.


What Is Data Masking?

Data masking alters sensitive data to create a fake but realistic version of the original. Also known as data obfuscation, this approach protects sensitive information while keeping it structurally similar to the real data and, most importantly, usable. Even so, data masking should aim to eliminate the risk of re-identification, so it must account for both direct identifiers (such as names) and indirect identifiers (such as ZIP code or date of birth). As organizations adopt multiple cloud data platforms, it is critical that data masking policies be applied consistently and dynamically to reduce potential points of failure.
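A minimal sketch of one masking technique, format-preserving substitution, shows what "fake but realistic" can mean in practice. The `substitute` function is a hypothetical helper. It masks each value independently and does nothing about indirect identifiers, which a real masking policy would also need to cover.

```python
import random
import string

# SystemRandom draws from the OS, so masked values cannot be predicted from a seed.
_rng = random.SystemRandom()


def substitute(value: str) -> str:
    """Static masking by format-preserving substitution (illustrative sketch).

    Digits become random digits and letters become random letters of the same
    case; separators such as '-', '.', and '@' are kept, so the masked value has
    the same length and shape as the original.
    """
    masked = []
    for ch in value:
        if ch.isdigit():
            masked.append(_rng.choice(string.digits))
        elif ch.isupper():
            masked.append(_rng.choice(string.ascii_uppercase))
        elif ch.islower():
            masked.append(_rng.choice(string.ascii_lowercase))
        else:
            masked.append(ch)  # keep punctuation and separators as-is
    return "".join(masked)


print(substitute("555-867-5309"))          # a random value shaped like ddd-ddd-dddd
print(substitute("Jane.Doe@example.com"))  # a random value shaped like Xxxx.Xxx@xxxxxxx.xxx
```

Keeping length and separators intact is what lets masked data flow through validators, schemas, and test fixtures that expect the real format.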

There are two data masking methods: static masking, which prevents any user from viewing the original data once it has been masked, and dynamic masking, which hides the original data from unauthorized users while allowing access for those with the right clearance. Whichever method is used, there is a range of data masking techniques to choose from, including tokenization. Other techniques include:

Below is an example of data masking using redaction.
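Redaction replaces some or all characters of a value with a fixed symbol. The hypothetical `redact` helper below is a minimal sketch of partial redaction that keeps the last few alphanumeric characters and any separators; the `keep_last` and `mask_char` parameters are illustrative choices, not a standard.

```python
def redact(value: str, keep_last: int = 4, mask_char: str = "*") -> str:
    """Replace all but the last `keep_last` alphanumeric characters with `mask_char`.

    Separators such as '-' and ' ' are left in place so the shape stays readable.
    """
    total = sum(ch.isalnum() for ch in value)
    to_mask = max(total - keep_last, 0)
    out = []
    for ch in value:
        if ch.isalnum() and to_mask > 0:
            out.append(mask_char)
            to_mask -= 1
        else:
            out.append(ch)
    return "".join(out)


print(redact("123-45-6789"))               # ***-**-6789
print(redact("4111 1111 1111 1111"))       # **** **** **** 1111
print(redact("123-45-6789", keep_last=0))  # ***-**-****
```

Unlike tokenization, nothing here records the original value, so the redacted output cannot be turned back into it.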


What’s the Difference Between Tokenization and Data Masking?

If tokenization is a subset of data masking, what's the difference between the two? The main distinction is that data masking is primarily used for data that is actively in use, while tokenization generally protects data at rest and in motion. This makes data masking the better option for sharing data with third parties. Additionally, although masking cannot be reversed, masked data may still be vulnerable to re-identification, for example when indirect identifiers are linked with other datasets. Tokenization, by contrast, is reversible but carries less risk of sensitive data being re-identified.

Between the two approaches, data masking is the more flexible. Not only does it encompass a broad range of techniques, as outlined above, but those techniques can be dynamically applied at query runtime using fine-grained access controls. With tokenization, there is a one-to-one relationship between the data and the token, which makes it inherently more static.
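The sketch below illustrates what applying masking "at query runtime" means: the stored rows stay intact, and each read returns a view masked according to the requesting user's clearances. The `User` class, `REQUIRED_CLEARANCE` mapping, and `query` function are hypothetical; real platforms express such rules as policies evaluated by the database or an access-control layer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    name: str
    clearances: frozenset[str]


# The stored rows are never modified; masking happens only when they are read.
CUSTOMERS = [
    {"name": "Jane Doe", "ssn": "123-45-6789", "state": "NY"},
    {"name": "John Smith", "ssn": "987-65-4321", "state": "CA"},
]

# Hypothetical fine-grained rule: the clearance needed to see each column unmasked.
REQUIRED_CLEARANCE = {"ssn": "pii_reader"}


def query(rows: list[dict[str, str]], user: User) -> list[dict[str, str]]:
    """Return copies of `rows`, masking each column the user is not cleared to see."""
    results = []
    for row in rows:
        view = {}
        for column, value in row.items():
            needed = REQUIRED_CLEARANCE.get(column)
            view[column] = value if needed is None or needed in user.clearances else "REDACTED"
        results.append(view)
    return results


analyst = User("analyst", frozenset())
auditor = User("auditor", frozenset({"pii_reader"}))
print(query(CUSTOMERS, analyst)[0])  # {'name': 'Jane Doe', 'ssn': 'REDACTED', 'state': 'NY'}
print(query(CUSTOMERS, auditor)[0])  # {'name': 'Jane Doe', 'ssn': '123-45-6789', 'state': 'NY'}
```

Changing who may see a column means changing one rule, not rewriting or re-tokenizing the stored data.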

The table below highlights some of the key differences between data masking and tokenization:

| Data Masking | Data Tokenization |
|---|---|
| Process of hiding or altering data values | Process of replacing data with a random token |
| Can be dynamically applied through data access policies | Applied on a 1:1 basis using a tokenization database |
| Cannot be reversed | Can be reversed using the system that generated the token |
| Optimal for data actively in use | Optimal for data at rest or in motion |
| Works for structured and unstructured data | Works best for structured data |
| Some risk of re-identification | Minimal risk of re-identification |
| Good for data sharing, testing, and development | Good for securing sensitive data at rest, e.g., credit card or Social Security numbers |

When Should I Use Tokenization vs. Data Masking?

The nuances between data tokenization and masking can make it complicated to distinguish when to use each approach, particularly since tokenization is a form of data masking. Ultimately, the answer comes down to the type of environment in which sensitive data is most used, the regulations the data is subject to, and the potential points of failure that could allow a breach to occur.

With these factors in mind, there are a few specific scenarios when you should consider one over the other.

When To Use Data Tokenization

Data tokenization is highly useful for protecting structured data and personally identifiable information (PII) such as credit card numbers, Social Security numbers, medical records, account numbers, email or home addresses, and phone numbers. Assuming the tokenization system is properly safeguarded and stored separately from the data, replacing these values with random strings of numbers, letters, or symbols prevents malicious actors who gain access to the tokenized data from seeing the actual values, and it simplifies compliance with data protection laws and regulations.

Because tokenization is well suited to data at rest or in motion, it is often used in e-commerce and financial services to achieve compliance with the Payment Card Industry Data Security Standard (PCI DSS). PCI DSS is mandated by the major card brands and applies to any organization that processes, stores, or transmits credit card information. Systems that handle only tokens, rather than the card numbers themselves, can often be taken out of scope for many PCI DSS requirements, so tokenization simplifies the compliance process while giving customers confidence that their card information is private and secure. As channels like e-commerce, mobile wallets, crowdfunding sites, and cryptocurrency continue to go mainstream, tokenization will likely remain a reliable method for securing sensitive data.
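As a hedged illustration of why tokenization eases PCI DSS work, the sketch below turns a card number into a same-length, digits-only token that keeps the real last four digits. Downstream systems that expect a card-number-shaped field, or that print the last four digits on a receipt, can keep working without ever holding the real number. `CardTokenizer` is a hypothetical class, and whether a given system actually falls out of PCI DSS scope depends on a full assessment, not on code like this.

```python
import secrets


class CardTokenizer:
    """Hypothetical format-preserving card tokenizer (illustration only).

    Tokens have the same length as the card number, contain only digits, and
    keep the real last four digits; all other digits are random.
    """

    def __init__(self) -> None:
        # In practice this mapping belongs in a separately secured vault.
        self._token_to_pan: dict[str, str] = {}
        self._pan_to_token: dict[str, str] = {}

    def tokenize(self, pan: str) -> str:
        digits = pan.replace(" ", "")
        if not digits.isdigit() or len(digits) < 8:
            raise ValueError("expected a numeric card number")
        if digits in self._pan_to_token:  # keep the mapping one-to-one
            return self._pan_to_token[digits]
        while True:
            random_part = "".join(secrets.choice("0123456789") for _ in range(len(digits) - 4))
            token = random_part + digits[-4:]
            # Never issue the real number itself, and never reuse an existing token.
            if token != digits and token not in self._token_to_pan:
                break
        self._token_to_pan[token] = digits
        self._pan_to_token[digits] = token
        return token

    def detokenize(self, token: str) -> str:
        return self._token_to_pan[token]


tokenizer = CardTokenizer()
token = tokenizer.tokenize("4111 1111 1111 1111")
print(token)                         # 16 digits ending in 1111, random otherwise
print(tokenizer.detokenize(token))   # 4111111111111111
```

Real payment tokenization schemes typically add further constraints, for example making sure a token can't be mistaken for a valid card number; this sketch omits them.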

While protecting financial data is a common use case for tokenization, the technique is also used by data teams in the medical, pharmaceutical, and real estate industries, to name a few. Data tokenization can also help enforce the principle of least privilege, which holds that people should have access only to the minimum data required to complete a specific task. Because only those with access to the tokenization system can detokenize data, tokenization is a sound way to control what data is discoverable and accessible, particularly in decentralized architectures like data lakes and data mesh. However, as previously mentioned, the one-to-one relationship between data assets and tokens makes tokenization a less scalable approach than data masking.
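Least privilege can be enforced at the detokenization step itself. In the minimal sketch below, the hypothetical `GuardedVault` lets any caller tokenize but only allow-listed roles recover raw values; the role names are invented for illustration.

```python
import secrets


class GuardedVault:
    """Hypothetical vault that enforces least privilege on detokenization."""

    def __init__(self, detokenize_roles: set[str]) -> None:
        self._detokenize_roles = frozenset(detokenize_roles)
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # In this sketch any caller may tokenize; it only removes raw data from circulation.
        if value not in self._value_to_token:
            token = secrets.token_urlsafe(12)
            while token in self._token_to_value:  # guard against a (very unlikely) collision
                token = secrets.token_urlsafe(12)
            self._value_to_token[value] = token
            self._token_to_value[token] = value
        return self._value_to_token[value]

    def detokenize(self, token: str, role: str) -> str:
        # Only roles that genuinely need the raw value may recover it.
        if role not in self._detokenize_roles:
            raise PermissionError(f"role {role!r} is not allowed to detokenize")
        return self._token_to_value[token]


vault = GuardedVault({"fraud_investigator"})
token = vault.tokenize("ACCT-0042-7731")
print(vault.detokenize(token, "fraud_investigator"))  # ACCT-0042-7731
try:
    vault.detokenize(token, "marketing_analyst")
except PermissionError as err:
    print(err)  # role 'marketing_analyst' is not allowed to detokenize
```

Analysts can still join, count, and group on the token column; only the few roles that truly need the underlying value can see it.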

When To Use Data Masking

Like tokenization, data masking is typically implemented to secure PII and protected health information (PHI). However, while data masking can be applied to many of the same types of data, it is primarily applied to data that is in use. This makes it effective for testing and development environments, as well as for data sharing.

Since data masking creates a realistic fake version of data, it is a convenient approach for secure software development and testing in non-production environments. This is because the masked data effectively mimics sensitive data, without the risks associated with using the real thing. This use case primarily benefits internal teams – but data masking also helps overcome barriers to data sharing with third parties. Data owners create and enforce masking policies that control who can see what data, so external users can see only the data to which they are authorized. Moreover, when implemented using attribute-based access control (ABAC), these dynamic policies are highly scalable and can be applied consistently across cloud data platforms.
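The scalability argument for attribute-based policies is that one rule, written against user attributes and data tags rather than against specific tables, covers every matching column. The sketch below is a hypothetical, platform-agnostic illustration of that idea, not Immuta's API: `COLUMN_TAGS`, `sees_raw`, and `read` are invented names, and the tags would normally come from a data catalog or discovery tool.

```python
# Hypothetical tags per (table, column), as a data catalog might record them.
COLUMN_TAGS: dict[tuple[str, str], set[str]] = {
    ("crm.customers", "email"): {"PII"},
    ("crm.customers", "region"): set(),
    ("billing.invoices", "email"): {"PII"},
    ("billing.invoices", "amount"): set(),
}

# A single attribute-based rule: mask anything tagged PII unless the user's
# "department" attribute is "compliance".
MASKED_TAG = "PII"
UNMASK_ATTRIBUTE = ("department", "compliance")


def sees_raw(table: str, column: str, user_attrs: dict[str, str]) -> bool:
    """True if the user may see this column's raw values under the single rule."""
    if MASKED_TAG not in COLUMN_TAGS.get((table, column), set()):
        return True
    key, required_value = UNMASK_ATTRIBUTE
    return user_attrs.get(key) == required_value


def read(table: str, row: dict[str, str], user_attrs: dict[str, str]) -> dict[str, str]:
    """Return a copy of `row` with governed columns masked for this user."""
    return {col: val if sees_raw(table, col, user_attrs) else "***" for col, val in row.items()}


marketing = {"department": "marketing"}
print(read("crm.customers", {"email": "jane@example.com", "region": "EU"}, marketing))
# {'email': '***', 'region': 'EU'}
print(read("billing.invoices", {"email": "jane@example.com", "amount": "42.00"}, marketing))
# {'email': '***', 'amount': '42.00'}
```

Tagging a new column as PII is enough to bring it under the same rule; no additional policy has to be written for each table or platform.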

The continued proliferation of data use rules and regulations, including the GDPR, HIPAA, and CCPA, compels organizations to incorporate data masking capabilities into their tech stacks. These laws require PII, PHI, and other sensitive data to be adequately protected from unauthorized access. Masking allows data teams to achieve regulatory compliance without locking data down entirely, which would negate its value. Data masking is therefore an important mechanism for preserving both data's utility and its privacy.


Implementing Tokenization and Data Masking for Modern Cloud Environments

The widespread use of sensitive data, and restrictions dictating how and why it can be accessed, necessitate advanced data security techniques like tokenization and data masking. However, without the right resources in place, managing tokens and data masking policies while scaling data use can put a high tax on data engineering teams’ time and productivity.

The Immuta Data Security Platform helps avoid this by automatically discovering, securing, and monitoring organizations’ data, allowing data teams to easily create and enforce dynamic data masking controls at scale. By separating policy from platform, Immuta acts as a centralized access control plane from which to apply attribute- and purpose-based access control (PBAC) consistently across all cloud platforms in the data stack. With these fine-grained access controls, data platform teams can implement advanced data masking techniques that simplify compliance with any rule or regulation, and can easily monitor data access and use for auditing purposes. Immuta’s flexible, scalable data access capabilities have allowed organizations worldwide to reduce their number of policies by 75x, while accelerating speed to data access by 100x.

To see for yourself how easy it is to implement data access control using Immuta, check out our walkthrough demo.
