“The emergence of generative AI and GPT technologies has brought about new concerns regarding data privacy and security.”

Diego Souza, Chief Information Security Officer at Cummins

Nearly 700 data leaders share how AI impacts their approach to data security and governance.

Introduction & Executive Summary

Compliance, privacy, and ethics have always been make-or-break mandates for enterprise organizations. But the stakes have never been higher than they are right now: Generative AI is allowing companies to test new limits and innovations, faster than ever.

For all of the innovation and potential that generative AI brings, it also presents a world of uncertainty and security risk: 80% of data experts agree that AI is making data security more challenging.

In their eagerness to embrace large language models (LLMs) and keep up with the rapid pace of adoption, employees at all levels are sending vast amounts of data into unknown and unproven AI models. The potentially devastating security costs of doing so aren’t yet clear.

In our 2024 State of Data Security Report, 88% of data leaders said employees at their organization were using AI, whether officially adopted by the company or not. Yet they expressed widespread concern about sensitive data exposure, training data poisoning, and unauthorized use of AI models. As adoption speeds up, these risks will only become more pressing.

As fast as AI is evolving, standards, regulations, and controls aren’t adapting fast enough to keep up. To manage the risks that come with this sea change, organizations need to secure their generative AI data pipelines and outputs with an airtight security and governance strategy.

We asked nearly 700 engineering leaders, data security professionals, and governance experts for their outlook on AI security and governance. In this report, we cover their optimism around the technology, the security challenges they’re facing, and what they’re doing to adopt it.

Key Insights

01 AI Is Making Data Security More Challenging

Organizations can’t adopt AI fast enough. The hype and excitement around it are too tempting to resist, which explains why more than half of data experts (54%) say that their organization already leverages at least four AI systems or applications. More than three-quarters (79%) also report that their budget for AI systems, applications, and development has increased in the last 12 months.

But this fast-paced adoption also carries massive uncertainty. AI is still a black box when it comes to security and governance.

Leaders cite a wide range of data security threats with AI and LLMs:

- 55% say inadvertent exposure of sensitive information by LLMs is one of the biggest threats.

- 52% are concerned about inadvertent exposure of sensitive information to LLMs via user prompts.

- 52% worry about adversarial attacks by malicious actors via AI models.

- 57% say that they’ve seen a significant increase in AI-powered attacks in the past year.

02 Confidence in AI Adoption & Implementation Is High

Despite all of the security and governance risks, data leaders are highly optimistic about their security strategy — raising potential concerns that they are overconfident:

- 85% say they feel confident that their data security strategy will keep pace with the evolution of AI.

- 66% rate their ability to balance data utility with privacy concerns as effective or highly effective.

To keep pace with the evolution of AI, data leaders must look at how they can scale and automate data security and governance, and give a strong voice to those teams responsible for managing risk in the organization.

03 Policies and Processes Are Changing

With new technology comes new responsibilities — and new security, privacy, and compliance risks. In response to the growth of AI adoption, IT and engineering leaders are adapting organizational governance standards, with 83% of respondents reporting their organization has updated its internal privacy and governance guidelines:

- 78% of data leaders say that their organization has conducted risk assessments specific to AI security.

- 72% are driving transparency by monitoring AI predictions for anomalies.

- 61% have purpose-based access controls in place to prevent unauthorized usage of AI models.

- 37% say they have a comprehensive strategy in place to remain compliant with recent and forthcoming AI regulations and data security needs.

04 Data Experts Are Forward-Facing

While it’s easy to worry about the challenges that AI adoption presents, many data leaders are also very excited about how the technology will help improve security and governance. From the ability to adopt new tools, to unlocking new ways of automating data management processes and implementing better safeguards, they are looking to the future with optimism.

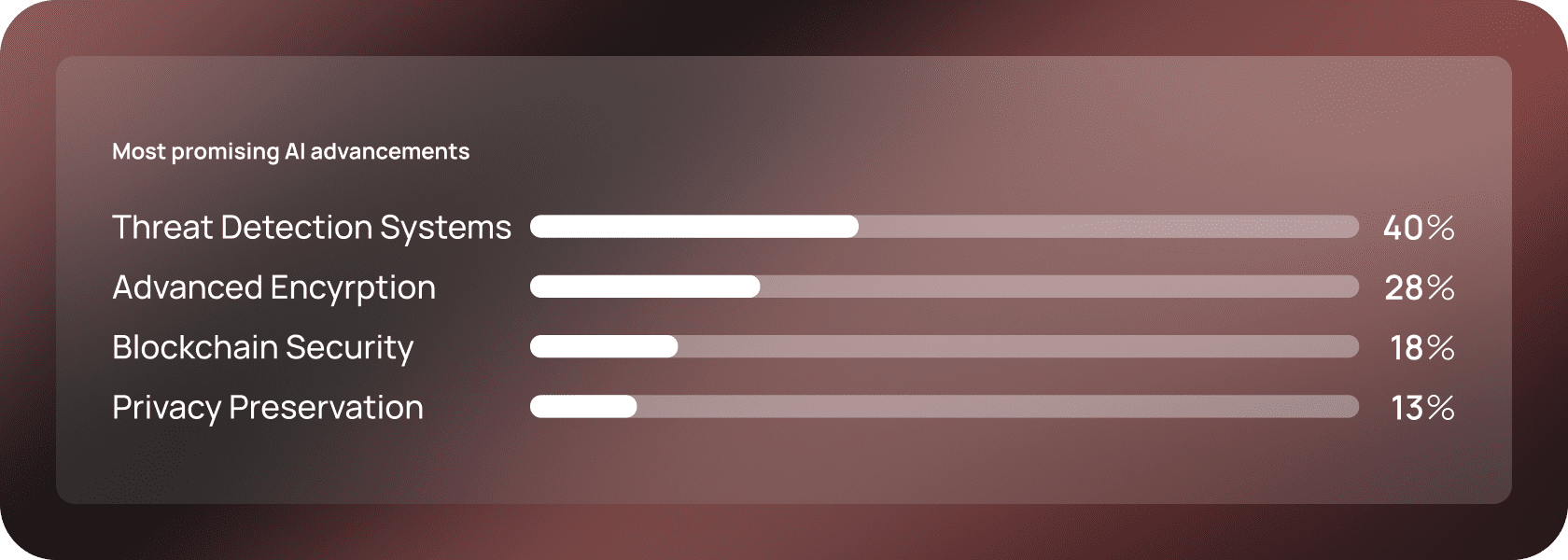

Data leaders believe that one of the most promising AI security advancements is AI-driven threat detection systems (40%). This makes sense, given how AI and machine learning are able to automate processes and quickly analyze vast data sets. Another auspicious advancement is the use of AI as an advanced encryption method (28%) that can help generate robust cryptographic keys and optimize encryption algorithms.

Data leaders are also interested in the potential for AI to serve as a data security tool. Respondents say that some of the main advantages of AI for data security operations will include:

- Anomaly detection (14%)

- Security app development (14%)

- Phishing attack identification (13%)

- Security awareness training (13%)

Methodology

Immuta commissioned an independent market research agency, UserEvidence, to conduct the 2024 AI Security and Governance Survey.


The study surveyed 697 data leaders and professionals from the US, UK, Canada, and Australia. Respondents represent global cloud-based enterprise companies across public and private sectors, with the majority (57%) in the technology sector. Other sectors surveyed include:

- Manufacturing

- Financial services

- Business and professional services

- Public sectors, such as government

- Private healthcare, including pharma

- Several other sectors

Over a third of respondents (34%) are senior leaders or C-level executives, and more than a quarter of respondents (28%) are mid-level managers.

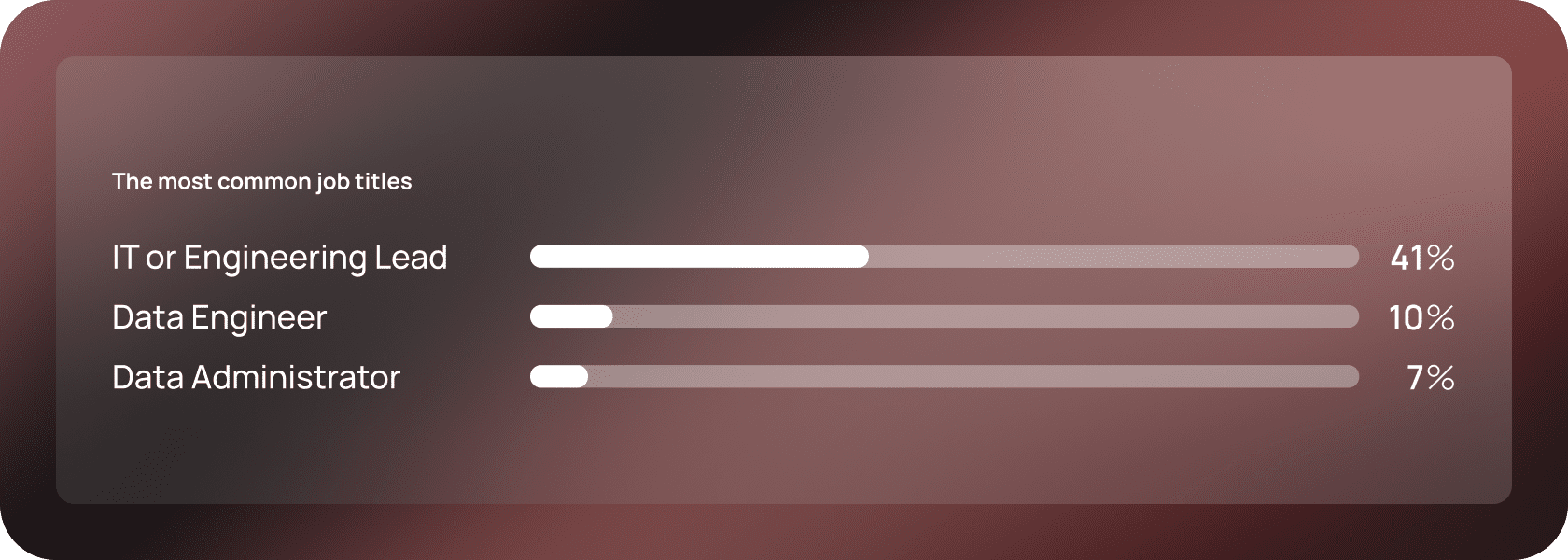

All respondents use data analytics, governance, or transformation tools in their roles, with 76% regularly using those tools at their organization. The most common job titles included:

01 AI Is Making Data Security More Challenging

The latest wave of generative AI advancements unlocks new levels of efficiency, productivity, and insights — yet these outcomes come at a cost, specifically to data security.

It bears repeating: 8 out of 10 respondents agree that AI is making data security more difficult.

When users feed data into public LLMs, it leaves their environment and, subsequently, their control. Many AI models treat newly submitted information as source material. This presents a higher risk of attack and other cascading security threats.

Making matters more complex, most models are black box-oriented, so understanding the security impact of specific data lapses is incredibly difficult.

“These models are very expensive to train and maintain and do forensic analysis on, so they carry a lot of uncertainty. We’re not sure of their impact or the scope.”

Joe Regensburger, VP Research at Immuta

Rapid Adoption & Growing Pains

Exacerbating the security risks even more is the rapid pace at which companies are adopting AI to keep up with the competition and market. More than half of respondents (54%) say their organization already leverages at least four AI systems or applications.

The result of such fast-paced adoption is straightforward. Some companies are building homegrown solutions and smaller, proprietary language models, though this is less common. More often, the high cost of training and maintaining LLMs means organizations send their data to a third party, amortizing the cost across a large number of users by relying on a commercial offering such as OpenAI’s models or Google’s Gemini.

However, the potential security impact of these models is much greater. Organizations don’t have an established relationship or degree of trust with these vendors, and they don’t know how the third parties will use their data. It’s far too easy to enter a prompt that includes sensitive data into ChatGPT’s open interface, even while AI remains largely unregulated.

All of this adds up to massive uncertainty. True AI security means answering questions like:

- Who’s using your data?

- Where is your data going?

- What is your data being used for after it leaves your environment?

Closer to home, incorporating AI into existing tech is its own challenge. Nearly half of data leaders (46%) rate the difficulty of integrating AI with legacy technologies as difficult or extremely difficult. Only 19% think it’s relatively easy.

The prompt engineering phase is vital for easing this transition. As more traditional databases start to implement a vector database infrastructure, users will be able to leverage unstructured text to search more efficiently. This will allow them to provide support materials specific to their organization, unlocking the niche knowledge models needed for better output.

“To reduce hallucinations, it’s important to provide sufficient domain-specific context to each prompt.”

Joe Regensburger, VP Research at Immuta
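As a rough illustration of supplying domain-specific context, the sketch below ranks a few internal documents against a question and prepends the best match to the prompt. Everything here is a hypothetical stand-in: the sample documents, the word-count `embed` function, and the `retrieve` and `build_prompt` helpers are assumptions for illustration, and a production system would rely on a real embedding model and vector database instead.

```python
import math
import re
from collections import Counter

# Hypothetical internal documents; in practice these would sit in a vector database.
DOCUMENTS = [
    "Customer records must be masked before leaving the finance domain.",
    "Quarterly sales dashboards refresh every Monday morning.",
    "Access to HR data requires an approved purpose and manager sign-off.",
]

def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (an assumption, not a real model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question: str, k: int = 1) -> list[str]:
    """Return the k documents most similar to the question."""
    query = embed(question)
    ranked = sorted(DOCUMENTS, key=lambda doc: cosine(query, embed(doc)), reverse=True)
    return ranked[:k]

def build_prompt(question: str) -> str:
    """Prepend retrieved context so the model answers from organization-specific material."""
    context = "\n".join(retrieve(question))
    return f"Context:\n{context}\n\nQuestion: {question}"

print(build_prompt("Who can get access to HR data?"))
```

The retrieval step is also a natural place to apply access policies, so that a user’s prompt is only enriched with material that user is allowed to see.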

Integrating AI with legacy technologies brings its share of challenges. First and foremost is security: 27% of respondents point to data security concerns as the biggest challenge when integrating AI with legacy software. They also cite major integration challenges like employee skills gaps (24%), data quality and accessibility issues (15%), and ethical and regulatory compliance concerns (11%).

Threats Posed by AI

The number-one data security threat with AI and LLMs is inadvertent exposure of sensitive information by LLMs, with 55% of respondents agreeing it’s a significant risk. And at least half of data leaders are concerned about other key data security threats:

- Adversarial attacks by malicious actors via AI models (52%)

- Inadvertent exposure of sensitive info to LLMs via user prompts (52%)

- Use of purpose-built models for unauthorized tasks (52%)

- Shadow AI, or using AI in unsanctioned ways outside of IT governance (50%)

While the exposure of sensitive information by LLMs is a top-perceived threat, respondents rank adversarial attacks via AI models as a slightly bigger security threat overall. And attacks enabled by AI are also on the rise: Over half of respondents (57%) say that they’ve seen a significant uptick in AI-powered attacks in the past year.

According to McKinsey, 53% of organizations acknowledge cybersecurity as a generative AI-related risk, but just 38% are working to mitigate it.

The negative outcomes from these attacks are steep. They range from exposure of sensitive data (48%) and proprietary information or IP (38%), to performance disruptions (40%) and system downtime (36%). Another 26% had to pay regulatory fines or penalties.

As much innovation and potential as AI offers, the technology can be, and will continue to be, used for nefarious purposes. The effects of those attacks, including reputational damage, operational impacts, and financial penalties, are a harsh reminder of AI’s shadow side.

Do the Right Thing With Ethical AI

Despite unfolding guidelines like the US AI Bill of Rights and the EU AI Act, AI regulation remains in its infancy. However, organizations still have a massive responsibility to define and uphold the ethics of their AI usage. This ranges from maintaining human oversight and remaining upfront about how LLMs are trained, to protecting users’ sensitive data from exposure and leaks.

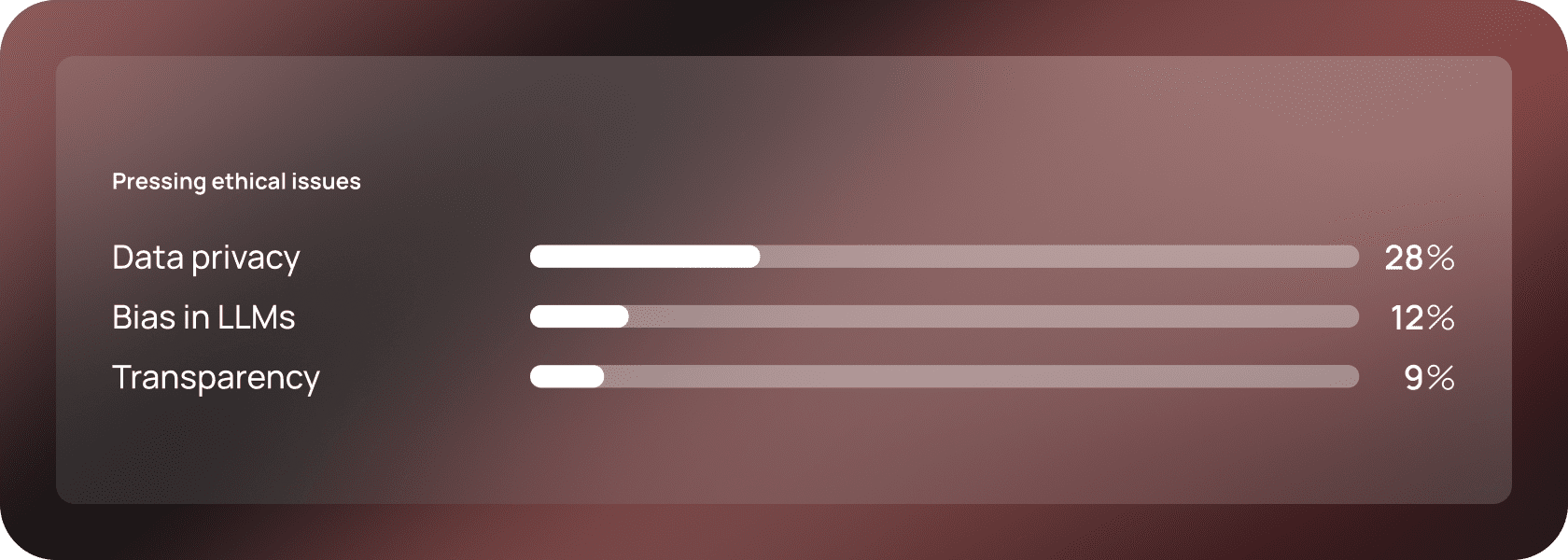

What is on data leaders’ minds when it comes to AI ethics? Close to half of respondents (48%) cite security as the most pressing ethical concern with AI. Other pressing ethical issues include:

Although only 12% of respondents rank bias as their most pressing ethical concern, data leaders across the board are thinking about it often. Around two-thirds (65%) say that AI bias is a moderate concern for them.

The security impacts of bias can be far-reaching. For instance, bias leaves organizations vulnerable when a model has a limited threat detection scope or overlooks risks — and the potential fallout is too great to ignore when data protection is at stake.

02 Confidence in AI Adoption & Implementation Is High

Despite its security challenges, most organizations are steadily throwing their weight — and spending — behind AI technology.

More than three-fourths of respondents (78%) say that their budget has increased for AI systems, applications, and development over the past 12 months. Companies anticipate significant business value through additional AI adoption, and they feel positive about their ability to adapt to the security challenges and changes ahead.

Strong Confidence in AI Data Security Strategies

Despite so many data leaders expressing that AI makes security more challenging, 85% say they’re somewhat or very confident that their organization’s data security strategy will keep pace with the evolution of AI. This is in contrast to research just last year that found 50% strongly or somewhat agreed that their organization’s data security strategy was failing to keep up with the pace of AI evolution.

In this survey, respondents expressed similar, but slightly lower, confidence in the intersection between using and protecting data in AI applications. Two-thirds (66%) of data leaders rated their ability to balance data utility with privacy concerns as effective or highly effective.

Much about AI security remains unknown, and businesses are still in a “honeymoon period” with the technology. New challenges and potential AI failures arise all the time, which are sure to prompt new regulations and standards. Even now, the EU’s AI Act enacts controls around not just the data that can go into a model but why someone can use a model — and purpose-based AI restrictions are a new challenge that organizations don’t yet know how to enforce.

So while data leaders may feel deep confidence in their AI data security strategy, it’s impossible to predict where regulations are headed in the future. Organizations should prepare and train their teams in line with the current state of AI regulations, and make their strategy agile enough to evolve as needed.

“In the age of cloud and AI, data security and governance complexities are mounting. It’s simply not possible to use legacy approaches to manage data security across hundreds of data products.”

Sanjeev Mohan, Principal at SanjMo

Standards & Frameworks

One primary contributor to the widespread confidence in AI security we’re seeing is a reliance on external standards and internal frameworks for both data governance and ethics.

More than half of data leaders (52%) say their organization follows national or international standards for governance frameworks, or guidelines around AI development. Slightly more (54%) say they use internally developed frameworks.

These industry standards are useful reference points, yet they’re also as new as the recent AI advancements themselves. Organizations need to incorporate these standards into their governance frameworks while taking their own proactive steps to address AI security risks internally.

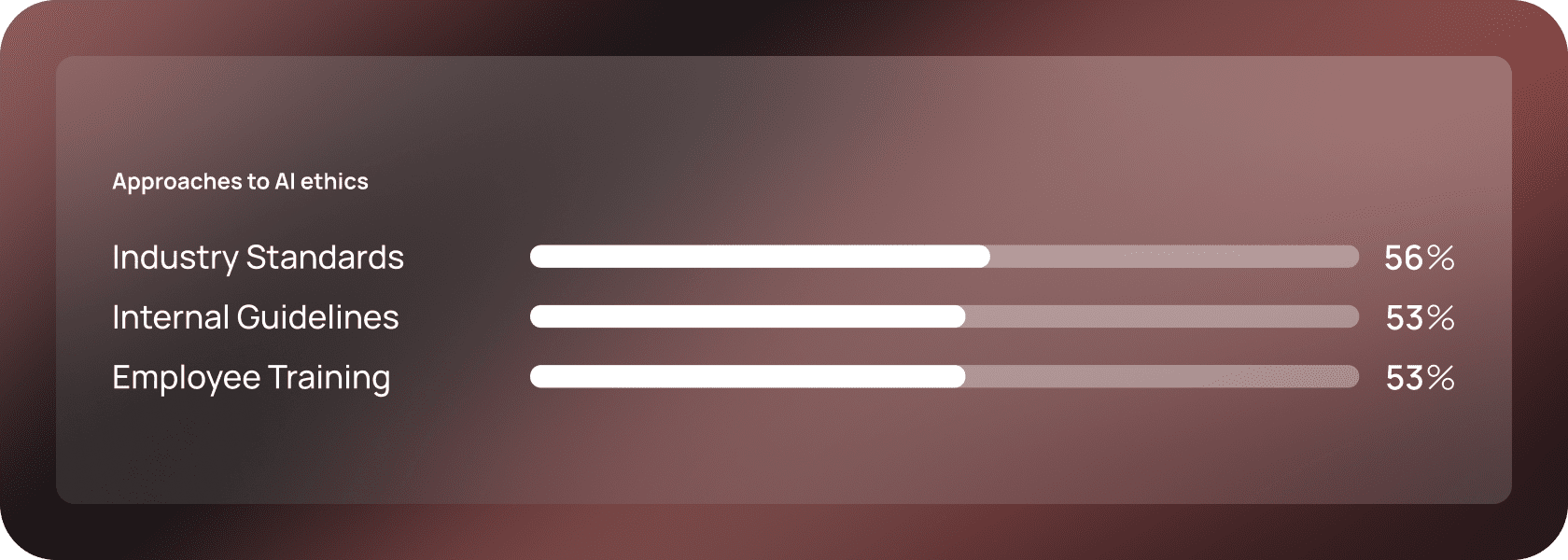

Data experts are similarly using a mix of approaches to AI ethics, with the largest proportion (56%) following industry standards. They’re also setting specific internal ethical guidelines or policies (53%) and conducting regular ethics training and awareness programs with employees (53%). This last approach is critical. Rather than setting and forgetting ethical guidelines for AI, leaders need to take an active role in defining and updating these policies — and training teams to abide by them.

Organizations need to create policies and structures that help them avoid major reputational backlash from ethical dilemmas, like using biased data to train recruiting tools or relying on non-transparent data collection and analytics.

Ethics poses a significant risk — one that requires an intentional and proactive approach to unknown challenges rather than a reactive one.

03 Policies and Processes Are Changing

The question marks around AI data security call for organizations to adjust their approach to privacy and compliance. A large majority are answering that call: 83% of respondents say that their organization has updated its internal privacy and governance standards in response to growing AI adoption.

In many cases, these changes aren’t mere updates. Companies are establishing AI policies and standards for the first time. The latest iteration of AI gives frontline employees an unprecedented ability to interact with and feed large volumes of data into LLMs — it’s uncharted territory, to say the least. This uncertainty is prompting organizations to enact new controls.

New Measures for New Adoption

Over three-fourths of data leaders (78%) say that their organization has conducted a risk assessment specific to AI security to identify potential weaknesses.

“Risk is a multi-dimensional problem: it’s any undesired outcome with an impact. We have to assess privacy risk, confidentiality, and the risk of incorrect results all along the way.”

Joe Regensburger, VP Research at Immuta

At a minimum, most data leaders are working to identify risks within their organization and technology. But that’s just the first step to mitigating them. What next steps are they taking to manage the risks they find?

To minimize bias, data leaders say that they’ve put measures in place, such as:

- Regular reviews of model predictions for evidence of bias (53%)

- Regular reviews of training data for evidence of bias (51%)

- Employment of AI fairness tools (39%)

These methods necessitate all-new processes and ongoing work for teams involved in AI security.
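One simple form a regular review of model predictions could take is a comparison of positive-prediction rates across groups. The sketch below is illustrative only: the sample records, the group labels, and the `REVIEW_THRESHOLD` value are assumptions, and the metric shown (a demographic parity gap) is just one of several fairness measures a team might choose.

```python
from collections import defaultdict

def positive_rate_by_group(records):
    """Share of positive predictions per group; records are (group, prediction) pairs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, prediction in records:
        totals[group] += 1
        positives[group] += int(prediction == 1)
    return {group: positives[group] / totals[group] for group in totals}

def parity_gap(records):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rate_by_group(records)
    return max(rates.values()) - min(rates.values())

# Hypothetical review sample: (group label, model prediction)
sample = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

REVIEW_THRESHOLD = 0.2  # assumed tolerance; each organization would set its own

gap = parity_gap(sample)
print(f"Parity gap: {gap:.2f}, flag for review: {gap > REVIEW_THRESHOLD}")
```

The same calculation can be run on training labels rather than predictions to review training data for evidence of bias.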

While AI transparency is not the most pressing ethical concern for data leaders, most are still taking steps to ensure that their models are transparent. Much like minimizing bias, transparency efforts involve processes to review AI output, including:

- Monitoring AI predictions for anomalies (72%)

- Requiring model cards that include details on how models have been trained (46%)

- Red team reviews of AI models to push them past guardrails and inform further training (32%)
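Monitoring predictions for anomalies can start with something as basic as comparing recent model scores against a trusted baseline. The following is a minimal sketch under stated assumptions: the scores are invented, the `find_anomalies` helper is hypothetical, and a z-score cutoff of 3.0 is a common convention rather than a recommendation from this survey.

```python
import statistics

def find_anomalies(baseline, recent, z_threshold=3.0):
    """Return (index, value) pairs in `recent` that sit far from the baseline mean.

    A value is flagged when its distance from the baseline mean, measured in
    baseline standard deviations (its z-score), exceeds `z_threshold`.
    """
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        # A perfectly flat baseline: any deviation at all is unusual.
        return [(i, value) for i, value in enumerate(recent) if value != mean]
    return [
        (i, value)
        for i, value in enumerate(recent)
        if abs(value - mean) / stdev > z_threshold
    ]

# Hypothetical model confidence scores
baseline_scores = [0.52, 0.48, 0.50, 0.51, 0.49, 0.50, 0.53, 0.47]
recent_scores = [0.50, 0.51, 0.95, 0.49]

print(find_anomalies(baseline_scores, recent_scores))
```

Flagged predictions would then go to a human reviewer, which is where the ongoing workload for security teams comes from.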

Companies are also implementing policies to prevent the unauthorized use of AI models by employees — and most are looking to data access control and governance to enforce them:

- 61% use purpose-based access control for models

- 58% enforce model usage and persona monitoring

- 44% implement end-user license agreements
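To make the first two controls concrete, here is a hedged sketch of how a purpose-based check with usage logging might look. The model names, purposes, `ModelPolicy` shape, and `authorize` function are all hypothetical and do not describe any particular product’s API.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelPolicy:
    """Purposes a model may be used for (hypothetical policy shape)."""
    model: str
    allowed_purposes: frozenset

@dataclass(frozen=True)
class AccessDecision:
    allowed: bool
    reason: str

# Hypothetical policies: each model is tied to the purposes it was built for.
POLICIES = {
    "support-summarizer": ModelPolicy("support-summarizer", frozenset({"customer_support"})),
    "fraud-scorer": ModelPolicy("fraud-scorer", frozenset({"fraud_detection", "audit"})),
}

AUDIT_LOG = []  # every request is recorded, which supports model usage monitoring

def authorize(user: str, model: str, purpose: str) -> AccessDecision:
    """Allow a request only when the declared purpose is permitted for the model."""
    policy = POLICIES.get(model)
    if policy is None:
        decision = AccessDecision(False, "unknown model")
    elif purpose not in policy.allowed_purposes:
        decision = AccessDecision(False, f"purpose '{purpose}' not permitted")
    else:
        decision = AccessDecision(True, "purpose permitted")
    AUDIT_LOG.append((user, model, purpose, decision.allowed))
    return decision

print(authorize("ana", "fraud-scorer", "audit"))
print(authorize("ana", "fraud-scorer", "marketing"))
```

Denying by default when a model has no policy keeps unsanctioned models from slipping through, and the log gives governance teams a record of who asked for what and why.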

“Our aim was to create more intelligent access control with greater efficiency. This was only possible through an advanced implementation of access control, which facilitates a higher degree of automation and transparency.”

Vineeth Menon, Head of Data Lake Engineering at Swedbank

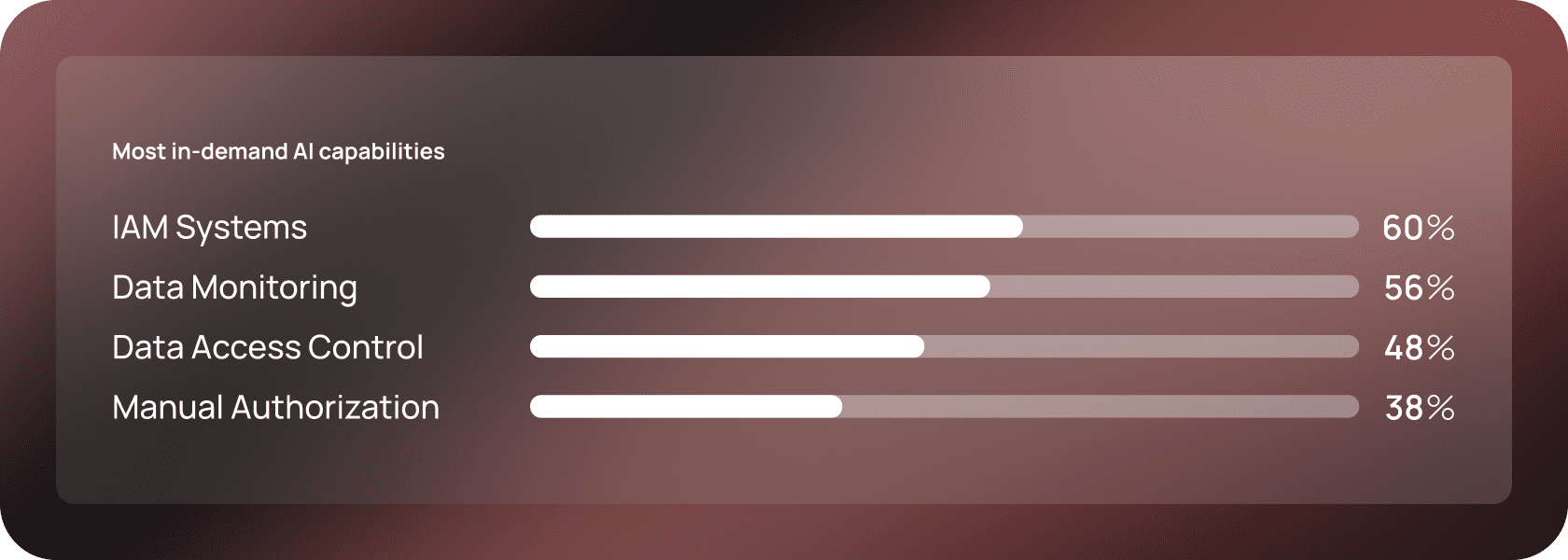

Along the same lines, data access management plays an essential role in mitigating the risk of shadow AI. Some of the most in-demand capabilities include:

Adjustments for Compliance

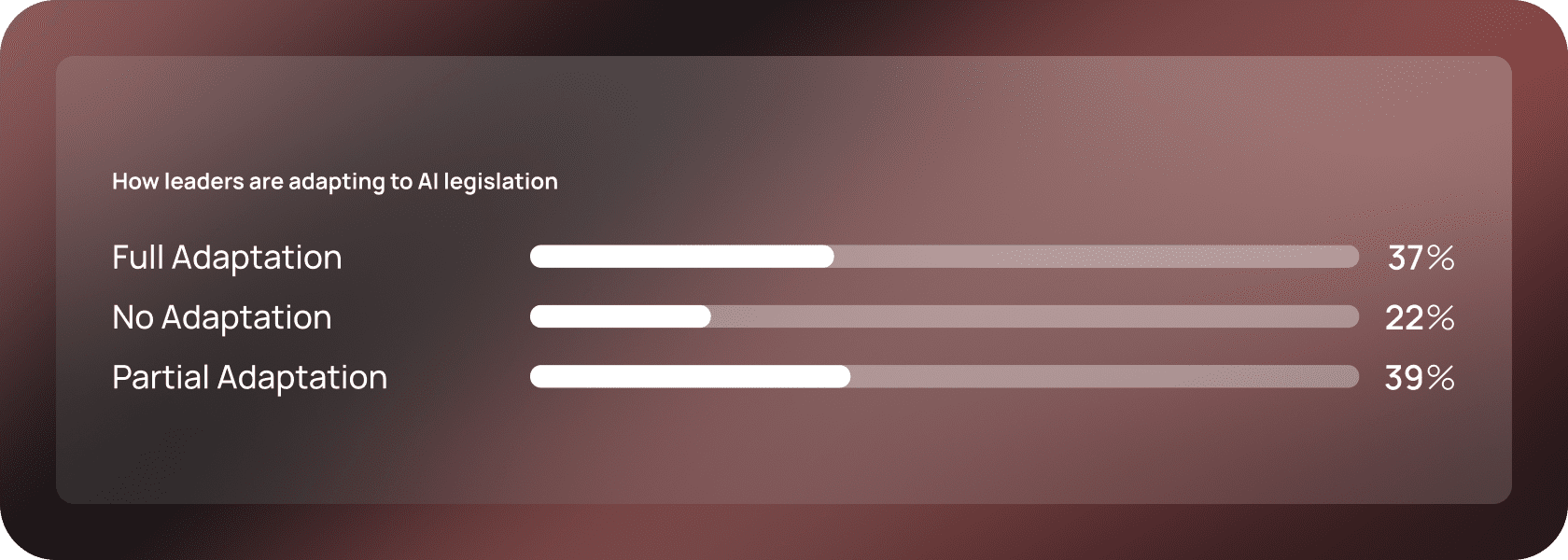

As the regulatory landscape develops, AI will undeniably bring dangers and challenges that organizations aren’t equipped to handle. The unknowns surrounding AI regulations may explain why data leaders are split on their responses to fluid and evolving legislation:

New AI regulations and laws are being developed across various jurisdictions, which will only continue as the technology advances further. The 63% of organizations that don’t have a comprehensive strategy for regulatory compliance may soon find AI regulations changing faster than they can adapt.

“Regulations are not static. It may seem like compliance is going to be an easy piece, but that doesn’t necessarily address the unknowns that are going to come about. Regulations are still nascent in nature. It’s easy to comply in a vacuum, but that vacuum is going to be filled sooner than later.”

Joe Regensburger, VP Research at Immuta

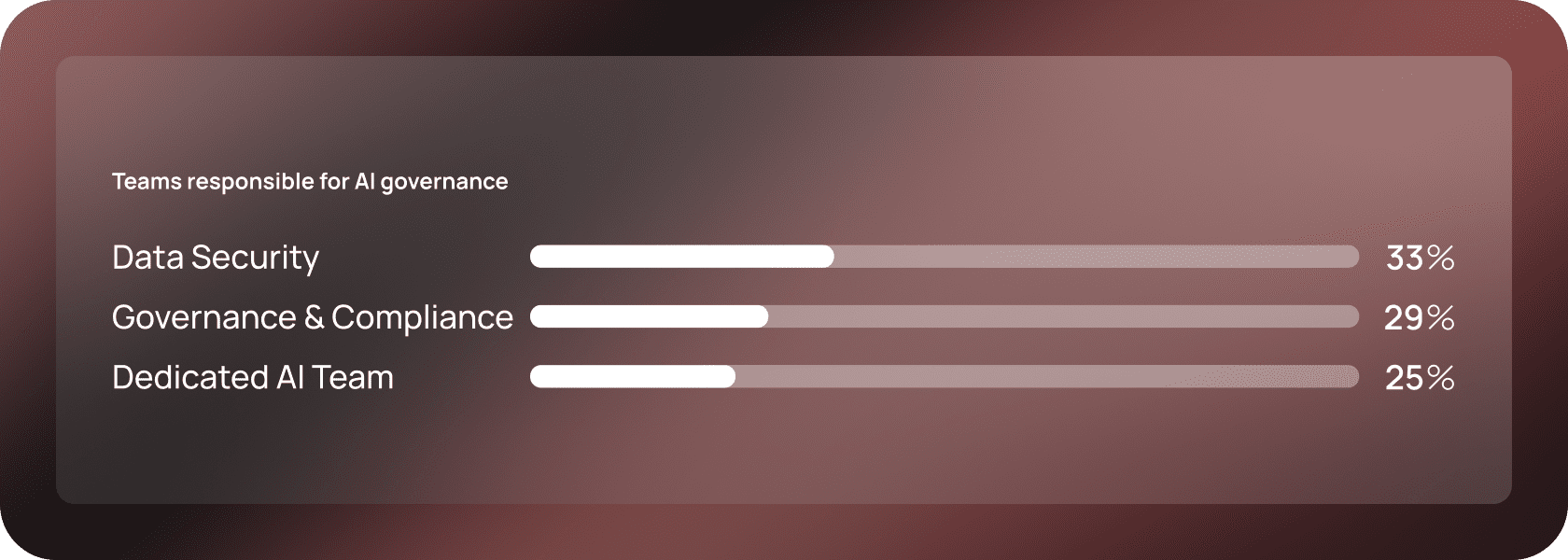

The Teams Responsible for New Policies

While AI can accomplish plenty — including facilitating security efforts — it can’t manage its own security. Organizations need people behind their policies and processes. Here are the teams data leaders say are responsible for their AI governance:

Ideally, those teams would handle every aspect of AI governance, including regulatory compliance. But in the real world, that’s typically not the case.

Compliance shouldn’t be the role of data engineers, architects, and scientists. But without the infrastructure for non-technical employees to enact controls, at least some accountability for data access policy enforcement tends to fall on engineers and architects, rather than solely compliance personnel.

Respondents say that data architects and engineers do play a role in regulatory compliance strategy: 36% say it’s a central role, while 49% say it’s supportive. This added responsibility makes their job less efficient overall. When organizations democratize compliance, they enable security experts — who aren’t engineers — to provide the context needed to give the right access to the right people.

“The ideal case is that you let security and compliance personnel handle compliance, and allow engineers to do what they do best.”

Joe Regensburger, VP Research at Immuta

Organizations are also pointing their teams’ time and attention toward ethics. Three-quarters (76%) say that they have a specific group or committee dedicated to overseeing ethical AI usage.

04 Data Experts Are Forward-Facing

These widespread changes to budgets, training, and policies all point to not just the changes AI is already bringing, but the innovation to come.

While most data leaders (66%) agree that privacy concerns have slowed or hindered the integration of AI applications within their organization, their outlook on data protection within AI models is positive:

- 80% agree their organization is capable of identifying and mitigating threats in AI systems

- 74% agree that they have full visibility into the data being used to train AI models

- 81% agree that their organization has updated its governance and compliance standards in response to AI

These assessments of risk, transparency, and compliance align closely with the confidence levels we noted earlier. And while a large majority of companies may have initially adapted, it’s less clear what they’ll have to do to stay up-to-date with data protection and security as both risks and regulations continue to evolve.

This is where the future of AI security — the tools and processes that protect AI systems from threats — is of critical importance. AI security is progressing as well, and data leaders say that these advancements are the most promising:

AI as a Tool For Data Security

AI innovation unlocks a wide range of potential security uses, and data leaders are split on what they think the biggest benefit will be for their organization.

When asked about the main advantage AI will have on data security operations, the two functions that rank highest are anomaly detection and security app development (both 14%). Respondents also think AI will help enable data security through:

- Phishing attack identification (13%)

- Security awareness training (13%)

- Enhanced incident response (12%)

- Threat simulation and red teaming (10%)

- Data augmentation and masking (9%)

- Audits and reporting (8%)

- Streamlining SOC teamwork and operations (8%)

In the future, AI is likely to be a powerful force for security. For instance, recent Gartner research found that by 2027, generative AI will contribute to a 30% reduction in false positive rates for application security testing and threat detection.

Yet in the meantime — at least through 2025 — Gartner advises security-conscious organizations to lower their thresholds for suspicious activity detection. In the short term, this means more false alerts and more human response, not less.
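The trade-off behind a lower detection threshold is easy to see with a toy example. The suspicion scores and incident labels below are invented purely for illustration, and `alert_counts` is a hypothetical helper; the point is only that catching more real incidents by lowering the threshold also raises the number of false alerts that people must triage.

```python
# Hypothetical suspicion scores for ten events; True marks a real incident.
events = [
    (0.95, True), (0.80, False), (0.72, True), (0.65, False), (0.60, False),
    (0.55, True), (0.40, False), (0.35, False), (0.20, False), (0.10, False),
]

def alert_counts(events, threshold):
    """Count (true alerts, false alerts) when scores at or above `threshold` raise an alert."""
    true_alerts = sum(1 for score, real in events if score >= threshold and real)
    false_alerts = sum(1 for score, real in events if score >= threshold and not real)
    return true_alerts, false_alerts

total_incidents = sum(1 for _, real in events if real)

for threshold in (0.9, 0.7, 0.5):
    caught, false_alarms = alert_counts(events, threshold)
    print(
        f"threshold={threshold}: caught {caught} of {total_incidents} incidents, "
        f"{false_alarms} false alerts"
    )
```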

We don’t know what we don’t know about AI. The top priority in this stage of rapid evolution should be to reduce uncertainty: Uncertainty increases risk exposure, which in turn, drives up costs. Introduce guardrails to minimize hallucinations in AI outputs, vet the results, and assess any risks associated with the data you feed your LLMs.

AI Security and Governance in 2024 and Beyond

The rapid changes in AI are understandably exciting, but they also bring many unknowns. This is especially true as regulations are fluid and many models lack transparency. Data leaders should pair their optimism with the reality that AI will continue to change, and the goalposts of compliance will continue to move as it does.

“It won’t take much to cause regulations to change pretty quickly. The more we use AI, the more failures and biases within models will become very public. So I think there will be ongoing challenges with compliance, particularly as we start to see more and more failures.”

Joe Regensburger, VP Research at Immuta

No matter what the future of AI holds, one action is clear: You need to establish governance that supports a data security strategy that isn’t static, but grows dynamically as you innovate.

At Immuta, we believe the future must be powered by the legal, ethical, and safe use of data and AI.

If you’re looking for a way to keep up with the current and upcoming AI landscape and de-risk your data, spend 29 minutes with Immuta.

Glossary

Data access governance / Data access management: Data access governance and data access management are often used interchangeably. Both terms refer to an organization’s policies and procedures that facilitate data access. There are particular models for data access, such as Role-Based Access Control (RBAC) and Attribute-Based Access Control (ABAC). Both terms also cover the implementation of those models and the management of the policies, including how they are stored, executed, and monitored.

Data mesh: Data mesh is a modern data architecture design that decentralizes data ownership, leaving data governance and quality in the hands of self-contained domains. Built to meet the needs of hybrid and multi-cloud data environments, data mesh reduces data silos and bottlenecks and puts data in the hands of those who need it.

Generative AI: Generative AI is a form of artificial intelligence that uses generative models to create and output various content types, including text, images, graphics, audio, and video.

Large Language Model: A Large Language Model (LLM) is a type of machine learning model known for its natural language processing (NLP) capabilities. LLMs are trained using massive data sets and can both understand humans and generate conversational responses to questions or prompts.

Machine Learning: Machine learning (ML) is a subset of artificial intelligence (AI) that uses data and algorithms to train machines to learn as humans do. ML plays an emerging role in the data science field, as algorithms learn to review large data sets then identify patterns, predict outcomes, and make classifications.

Red teaming: Red teaming is the practice of simulating an attack on an organization’s own infrastructure, systems, or applications to test how they would hold up against attackers. Red team reviews of AI models push past guardrails to identify vulnerabilities such as hallucinations in a model’s output and model biases.

Shadow AI: Shadow AI is the unauthorized use of AI that falls outside the jurisdiction of IT departments. A new iteration of “shadow IT,” shadow AI leads to security and privacy risks, such as leaking sensitive customer or corporate data.

Shift left: The shift left approach to security introduces security measures and testing early in the development cycle to avoid major delays or expensive errors due to security or compliance gaps.